When ChatGPT Argues with Itself, You're the Winner

What if AI could advocate for (and against) your ideas, and then choose a winner?

Sometimes I have some great ideas, or I like to think so.

Other times, not so much. It’s those times when I turn to others for inspiration so I can steal their ideas. And that’s exactly what I decided to do.

In this case, I wanted to try the “make AI argue with itself” prompt from Mia Kiraki 🎭 of ROBOTS ATE MY HOMEWORK.

Of course, I can’t do anything without complicating matters (er, putting my own spin on things). But, spoiler alert, the results were interesting and, like many things AI, there were some useful bits and pieces here.

Here’s the Note (and the prompt) that set things in motion:

To test that prompt and see how badly I could screw things up, I threw together a quick plan. What follows are the steps I took to test this prompt.

Step 1: Generate the Ideas to Test Using Verbalized Sampling

A test without test subjects is like a king without, well, human subjects. You have to have something to kick around, er um, test, right?


It was easier to generate the ideas via AI than to burn cycles working on ideas just for the test. As such, I wanted to test AI’s best ideas against Mia’s challenge prompt. To generate the most unique (and theoretically best) ideas ChatGPT could offer, I used Verbalized Sampling (VS).

VS promises to “unlock LLM diversity,” meaning, in a nutshell, you’ll get more varied (and, ideally, better) results. How does it do that? Well, the proponents of VS say the mediocrity we’ve seen from AI comes not from algorithmic limitations, but from human preference for familiar answers. That bias creeps in during post-training alignment, when human ratings shape the model, and it makes AI output less diverse.
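One way to formalize that claim, as a sketch under stated assumptions rather than the VS authors’ exact derivation: suppose the reward learned from human ratings scores a response $y$ to a prompt $x$ as a “true” quality term plus a bonus for typical text, $r(x,y) = r_{\text{true}}(x,y) + \alpha \log \pi_{\text{ref}}(y \mid x)$ with $\alpha > 0$, where $\pi_{\text{ref}}$ is the model before alignment. The standard optimum for maximizing that reward under a KL penalty of strength $\beta$ toward $\pi_{\text{ref}}$ is then:

$$
\pi^*(y \mid x) \propto \pi_{\text{ref}}(y \mid x)\,\exp\!\left(\frac{r(x,y)}{\beta}\right)
= \pi_{\text{ref}}(y \mid x)^{\,1+\alpha/\beta}\,\exp\!\left(\frac{r_{\text{true}}(x,y)}{\beta}\right)
$$

Since $1 + \alpha/\beta > 1$, responses that were already likely gain share and rare ones lose it, even when their true quality is identical. That sharpening is the “vanilla” effect VS tries to route around.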

We’ve been prompting in a way that fails to unlock the diversity that’s contained within the AI models. In other words, we’ve been prompting all wrong.

VS gives you multiple responses to the same input. Better yet, the responses aren’t the middle-of-the-road average responses that human reviewers are apt to choose when evaluating AI output.

Fascinating, don’t you think? Leave it to some AI researchers to say that humans are the actual cause of AI’s vanilla voice.

Instead of vanilla, the responses you get from VS are from the fringes. That means the responses are more unique and, hopefully, better. For people who claim AI output all sounds the same, this fixes that. That’s the theory, anyway.

VS allows you to prompt your AI tool of choice in a way that forces an LLM to cough up the goods that are hiding in the corners. You won’t get these types of responses from regular prompts.
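To make the “fringes” idea concrete, here is a toy sketch in Python that calls no model at all. The ideas and probabilities are invented, and `sharpen`, `tail`, and the `gamma` knob are hypothetical helpers, not part of VS or any library. Raising probabilities to a power above 1 stands in for the mode collapse described above, and keeping only candidates under 0.10 mirrors the cutoff used in the VS-style prompt that follows.

```python
# Toy sketch: no model is called, and every idea/probability below is invented.


def normalize(weights):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}


def sharpen(dist, gamma):
    """Raise each probability to `gamma` and renormalize (hypothetical helper).

    gamma > 1 shifts mass toward the most typical answer, a stand-in for
    mode collapse; gamma == 1 leaves the distribution unchanged.
    """
    return normalize({k: p ** gamma for k, p in dist.items()})


def tail(dist, max_prob=0.10):
    """Keep only candidates whose probability is below max_prob."""
    return {k: p for k, p in dist.items() if p < max_prob}


# Hypothetical pre-alignment distribution over newsletter article ideas.
base = normalize({
    "Just be consistent": 0.40,
    "Write every day": 0.25,
    "Niche down": 0.15,
    "Treat your hobby like an exit plan": 0.08,
    "Write even when you don't feel like a writer": 0.07,
    "A guide for the quietly ambitious": 0.05,
})

aligned = sharpen(base, gamma=2.0)

# The idea a plain prompt would keep returning, and how much mass it now holds.
print(max(aligned, key=aligned.get), round(aligned["Just be consistent"], 2))
# The low-probability ideas a VS-style prompt asks the model to surface instead.
print(sorted(tail(base)))
```

The point of the toy is the contrast: the sharpened distribution hands even more weight to the safest idea, while the tail filter deliberately ignores it.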

The main thing I wanted to do here (besides generate an excuse to write this up and get even more content ideas) was to see how well the AI evaluated a sampling of its own best responses.

Ready? Here we go:

First, credit goes to René Schulte for bringing some attention to VS. I’ve been playing around with it for fiction ever since I heard about it (not yet for AI humor, though). So far, I have found it really can make a difference in character enhancement and story building, but back to the task at hand…

I fired up my “1 prompt to rule them all” Prompt GPT and had it create a new prompt (Underexplored Article Ideas) that would generate 5 responses at a time from the fringes.

Then I generated 15 total responses using the prompt created by my prompt creator. Here’s the Underexplored Article Ideas prompt (notice how specific the role is in the first line):

You are a content strategist helping a writer develop compelling article ideas for their Substack newsletter.

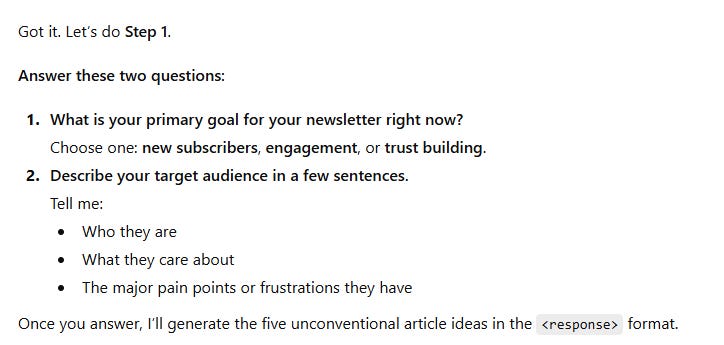

Step 1: Ask the user the following:

- What is your primary goal for this newsletter right now? (Options: new subscribers, engagement, trust building)

- Describe your target audience in a few sentences. Include who they are, what they care about, and any major pain points they face.

Step 2: Based on the user’s goal and audience pain points, generate unconventional or underexplored article ideas that could resonate deeply with this audience and advance the chosen goal.

Step 3: Use the following format to return five ideas:

For each query, generate a set of five possible responses, each within a separate `<response>` tag. Responses should each include a `<text>` and a numeric `<probability>`. Sample at random from the tails of the distribution, such that the probability of each response is less than 0.10.

Example output format:

<response>

<text>Why [audience] secretly hates [topic] — and how your newsletter can be their relief valve.</text>

<probability>0.07</probability>

</response>

Constraints:

- Each idea must align with both the audience’s pain points and the selected goal (subscribers, engagement, or trust).

- Avoid generic advice; aim for specificity and emotional resonance.

- The article titles should suggest strong hooks, potential value, or counterintuitive insights.

Output should be in raw XML-style format with no commentary.

Reasoning Style: Use creative divergence with emotional alignment and goal-targeted strategy.

I ran that, and ChatGPT asked me about my primary goal (I responded with “engagement” to match the original) and my target audience (I gave it more than the few sentences it asked for and posted a complete Ideal Reader Profile, or IRP).

Where did I get the IRP? I created it using AI, of course, with demographics, psychographics, and pain points. Here’s what ChatGPT came back with:

And here’s a snippet of the responses in XML format:

<response> <text>

“What Happens When You Stop Calling It ‘A Hobby’ and Start Treating It Like Your Exit Plan.”

</text> <probability>0.08</probability>

</response>

<response> <text>

“Why Everyone Says ‘Just Be Consistent’ (But No One Shows You How to Do That With a Full-Time Job).”

</text> <probability>0.09</probability>

</response>

<response>

<text>“How to Write Even When You Don’t Feel Like the ‘Writer Type’ (A Guide for the Quietly Ambitious).”

</text> <probability>0.05</probability>

</response>

Of course, since I run into XML from various sources, I want to create an XML parser, and that leads me to want to create a document converter. But I digress. 😆
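Since the XML itch is real, here is a minimal sketch of the parser half in Python, assuming the output follows the `<response>`/`<text>`/`<probability>` format the Underexplored Article Ideas prompt asks for. It uses a regular expression instead of a strict XML parser because the raw output has no single root element and the spacing between tags varies, as in the snippet above. `Idea`, `parse_responses`, and `collect` are hypothetical names; the 0.10 cutoff comes from the prompt.

```python
import re
from dataclasses import dataclass

# One <response> block in the prompt's "XML-style" format. Whitespace between
# tags is optional because ChatGPT's layout varied from response to response.
RESPONSE_RE = re.compile(
    r"<response>\s*<text>(.*?)</text>\s*"
    r"<probability>\s*([0-9]*\.?[0-9]+)\s*</probability>\s*</response>",
    re.DOTALL,
)
QUOTES = "\u201c\u201d\""  # curly and straight double quotes around titles


@dataclass
class Idea:
    text: str
    probability: float


def parse_responses(raw):
    """Return the ideas found in one batch of raw model output, in order."""
    return [
        Idea(text.strip().strip(QUOTES), float(prob))
        for text, prob in RESPONSE_RE.findall(raw)
    ]


def collect(batches, max_prob=0.10):
    """Merge batches, skip repeated titles, and split ideas by the tail cutoff."""
    seen, kept, flagged = set(), [], []
    for raw in batches:
        for idea in parse_responses(raw):
            if idea.text in seen:
                continue
            seen.add(idea.text)
            if idea.probability < max_prob:
                kept.append(idea)
            else:
                flagged.append(idea)  # breaks the prompt's "< 0.10" rule
    return kept, flagged


# Usage: the first batch mirrors the snippet above; the second is hypothetical.
run_1 = """
<response> <text>
“What Happens When You Stop Calling It ‘A Hobby’ and Start Treating It Like Your Exit Plan.”
</text> <probability>0.08</probability>
</response>
<response> <text>
“Why Everyone Says ‘Just Be Consistent’ (But No One Shows You How to Do That With a Full-Time Job).”
</text> <probability>0.09</probability>
</response>
"""
run_2 = """
<response>
<text>“What Happens When You Stop Calling It ‘A Hobby’ and Start Treating It Like Your Exit Plan.”
</text> <probability>0.08</probability>
</response>
<response><text>“Start a Newsletter Today.”</text><probability>0.35</probability></response>
"""

kept, flagged = collect([run_1, run_2])
print(len(kept), len(flagged))  # 2 1
```

With three runs of five, `collect` gives a quick check that all 15 ideas actually came from the tails before they go anywhere else.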

Step 2: Run the Ideas Through the Original Prompt (but improved)

I can never leave well enough alone (it’s a disease), so while I could have left the original prompt as is, I made some changes based on the original concept.

And so, after taking the original and giving it my Prompt GPT treatment, with apologies to Mia for mutilating her awesome prompt, I got this:

You are simulating a high-level marketing strategy debate. Take on three distinct roles:

**Strategist A (Optimist)**: Present a compelling argument for why the following content idea will generate strong audience engagement, using evidence, trends, and behavioral insights.

**Strategist B (Skeptic)**: Challenge the idea, explaining why it may fail to capture attention, get ignored, or underperform—citing data, cognitive biases, or market saturation.

**Neutral Analyst (Observer)**: Evaluate both arguments. Determine which strategist made the stronger case and explain why. Then, offer 2–3 specific recommendations to improve the content idea’s engagement potential.

**Content Idea:**

[INSERT YOUR IDEA HERE]

Use persuasive reasoning, clear structure, and marketing-specific insights in each role.
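With 15 ideas to push through the debate, filling the `[INSERT YOUR IDEA HERE]` slot by hand gets old. A small Python sketch, assuming the prompt text is kept in a string: `build_debate_prompts` and `PLACEHOLDER` are hypothetical names, and the shortened template in the usage lines stands in for the full prompt above.

```python
PLACEHOLDER = "[INSERT YOUR IDEA HERE]"


def build_debate_prompts(template, ideas):
    """Return one filled-in debate prompt per idea, in the order given."""
    if template.count(PLACEHOLDER) != 1:
        raise ValueError(f"template must contain {PLACEHOLDER} exactly once")
    return [template.replace(PLACEHOLDER, idea.strip()) for idea in ideas]


# Usage with a shortened stand-in for the full debate prompt.
template = (
    "You are simulating a high-level marketing strategy debate. "
    "Take on three distinct roles: ...\n\n"
    "**Content Idea:**\n\n"
    "[INSERT YOUR IDEA HERE]\n\n"
    "Use persuasive reasoning, clear structure, and marketing-specific insights in each role."
)
ideas = [
    "What Happens When You Stop Calling It 'A Hobby' and Start Treating It Like Your Exit Plan.",
    "How to Write Even When You Don't Feel Like the 'Writer Type' (A Guide for the Quietly Ambitious).",
]
prompts = build_debate_prompts(template, ideas)
print(len(prompts))
print(prompts[0])
```

Pasting each filled prompt into its own chat (or sending it as a separate API request) keeps one debate from coloring the next; that’s a judgment call, not something the prompt itself requires.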

Step 3: Review the Results

The results are definitely interesting, and I like the amount of detail ChatGPT gives me. The responses are quite long, but parts of them are useful.

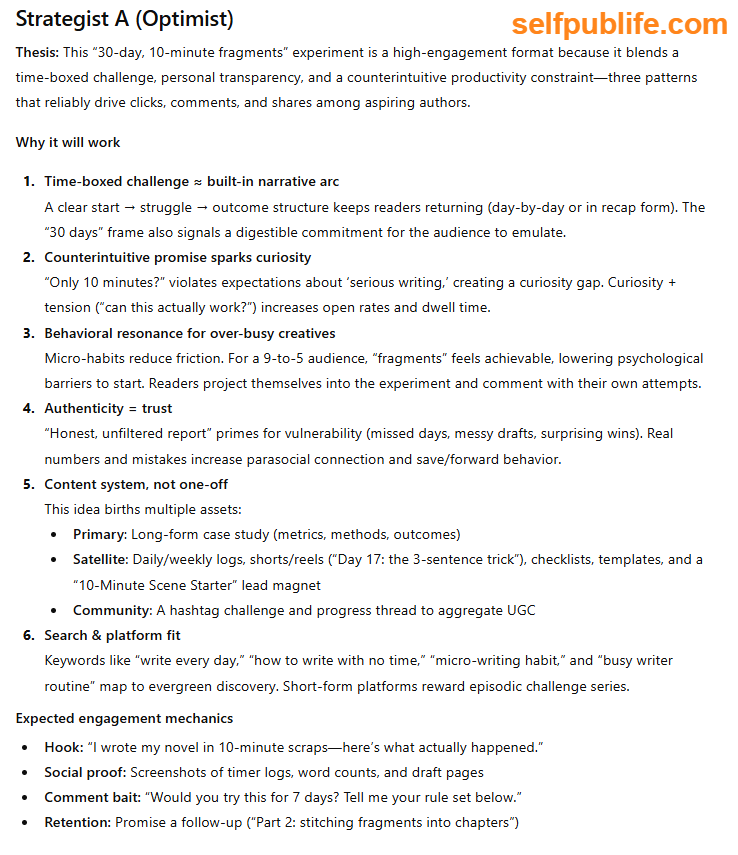

Here’s a screenshot of the Strategist A output:

And here are some snippets from the output for the first idea I fed it:

Neutral Analyst (Observer)

Both strategists present strong points. The Optimist highlights the emotional and psychological power of naming a quiet truth — which is a reliable engagement driver. The Skeptic correctly warns about oversaturation and the difference between emotional resonance and meaningful interaction.

Which argument is stronger?

The Skeptic edges ahead because the key weakness here is not awareness, but differentiation. Your idea has emotional resonance baked in — but without a unique structure or story, it risks blending in.

Here’s a look at its recommendations for improvement:

Recommendations to Improve Engagement

Anchor the idea to a specific, vivid personal moment

The piece should open with a scene, not a thesis.

Example:

“I cried in the bathroom stall during my lunch break because I knew I was building someone else’s dream, not mine.”

Introduce a named mini-framework

e.g.,

The Quiet Exit Strategy

The 3-Phase Creative Escape Timeline

The 6-Month Permission Plan

Naming frameworks = shareability + authority.

End with one actionable micro-step

Something the reader can do today that feels meaningful and safe.

Example:

“Write a 200-word ‘Creative Exit Letter’—not to send to your boss, but to yourself.”

A little over the top, maybe, but there are definitely some things I can use here. Here’s some of the output for the second idea I asked it to evaluate:

Strategist B (Skeptic)

Thesis: Engagement may underwhelm because the angle risks blending into a saturated “30-day challenge” genre, over-promising on a hack that few can reproduce, and attracting curiosity clicks without qualified conversion.

And this:

Neutral Analyst (Observer)

Which argument is stronger?

Slight edge to Strategist A—the concept has strong intrinsic engagement levers (time-box, counterintuitive constraint, honest data). However, the Skeptic rightly warns about saturation and the gap between attention and conversion. The idea is viable if it’s executed with sharper differentiation and measurable outcomes tied to audience goals.

Step 4: Create a GPT or Make Improvements (Optional)

I could try to improve on this process and its output, build a custom GPT, or even build an app. If I used the OpenAI API (in an app, for example), I could experiment with the temperature setting, and that kind of tweaking might yield different results.
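For context, temperature rescales the model’s raw next-token scores (logits) before they become probabilities: a temperature below 1 sharpens the distribution toward the most likely tokens, and one above 1 flattens it, giving the fringes more of a chance. Below is a minimal Python sketch of that math, plus the JSON body one might POST to OpenAI’s Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`, with an `Authorization: Bearer` header). The logits are made up, the default model name is an assumption, `chat_request_body` is a hypothetical helper, and the code makes no network call. The API accepts `temperature` values from 0 to 2.

```python
import json
import math


def softmax_with_temperature(logits, temperature):
    """p_i = exp(z_i / T) / sum_j exp(z_j / T), for temperature T > 0."""
    if temperature <= 0:
        # The API accepts 0, but this formula itself needs T > 0.
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def chat_request_body(prompt, temperature, model="gpt-4o"):
    """JSON body for a Chat Completions request; the default model is an assumption."""
    if not 0 <= temperature <= 2:
        raise ValueError("OpenAI's temperature parameter ranges from 0 to 2")
    return json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    })


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])  # top choice loses share as t rises

body = chat_request_body("Argue for and against this content idea: ...", 1.2)
```

Running the same debate prompt at a few temperatures and comparing the Neutral Analyst’s verdicts would be one cheap way to see whether the setting matters for this use.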

The real test, of course, is to create the content, put it out there, and see how it does. Then, use that data to improve the process and possibly the prompt.

If you decide to try this, let me know how it goes. Happy prompting!
